Screenshots (Counter-strike 1.6 and Cyberpunk 2077), video captures, LORA models, ComfyUI workflows, generated text, images, & video

California-Strike, ~ fps_max 16, Rendering…

My imaginary has always been, like American dreams and reality, very violent. A vivid field trip bus ride daydream vision, of tanks lobbing shells at each other across the 10 freeway, might still come to pass. Real flesh and blood conflicts spawn electromechanical ones, equally real, differently playful, which in turn throw off sparks for new wild firefighting.

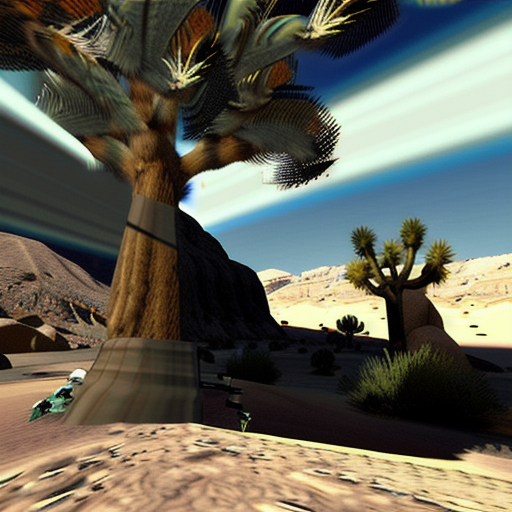

As a suburban LA teenager in the early 2000s, my life was playing the team-based competitive multiplayer first person shooter Counter-Strike in cybercafes with my friends. So I've invaded my favorite California places, hallucinated in the soup of silicon valley's stolen art, with the wrongly re-rendered remnants of these networked battleplaces.

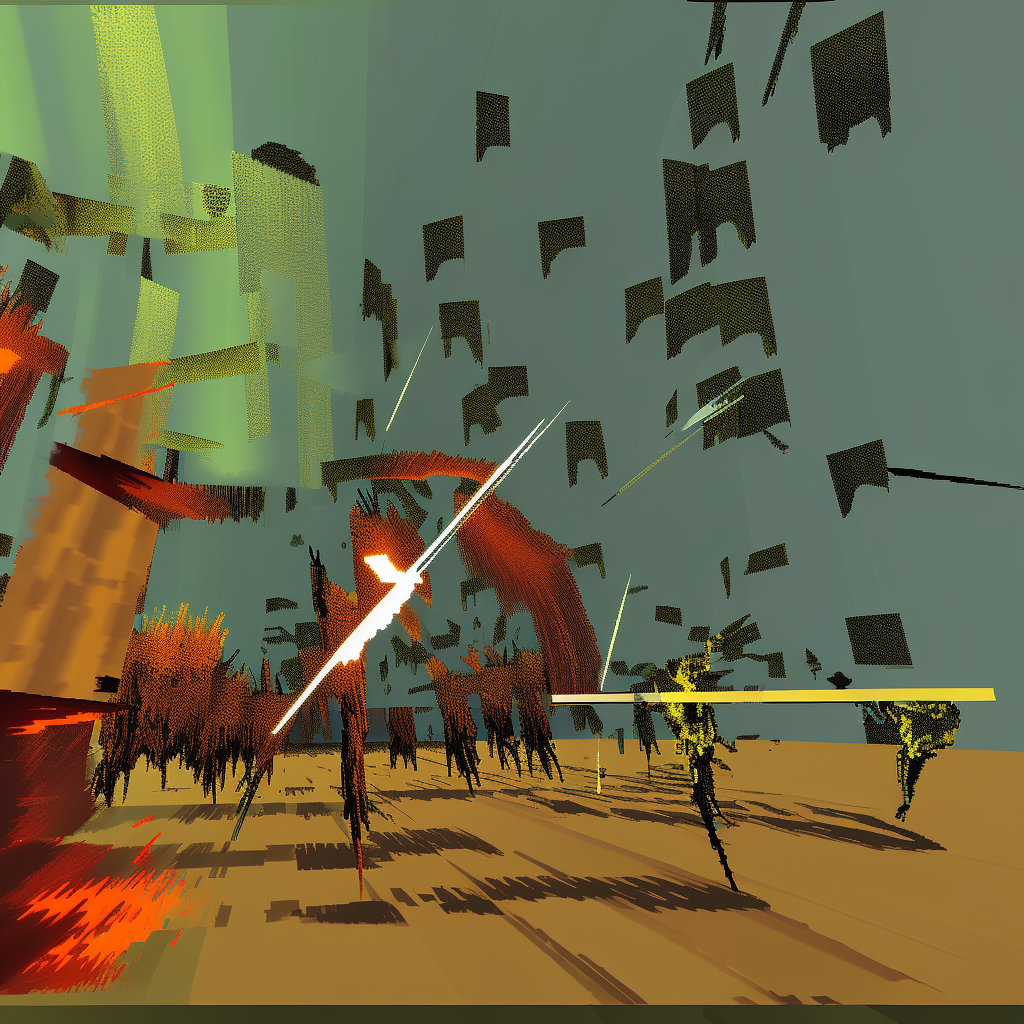

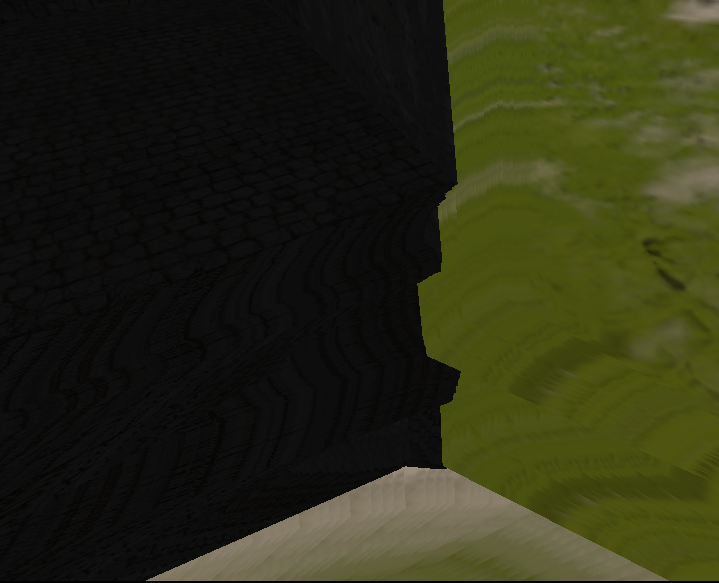

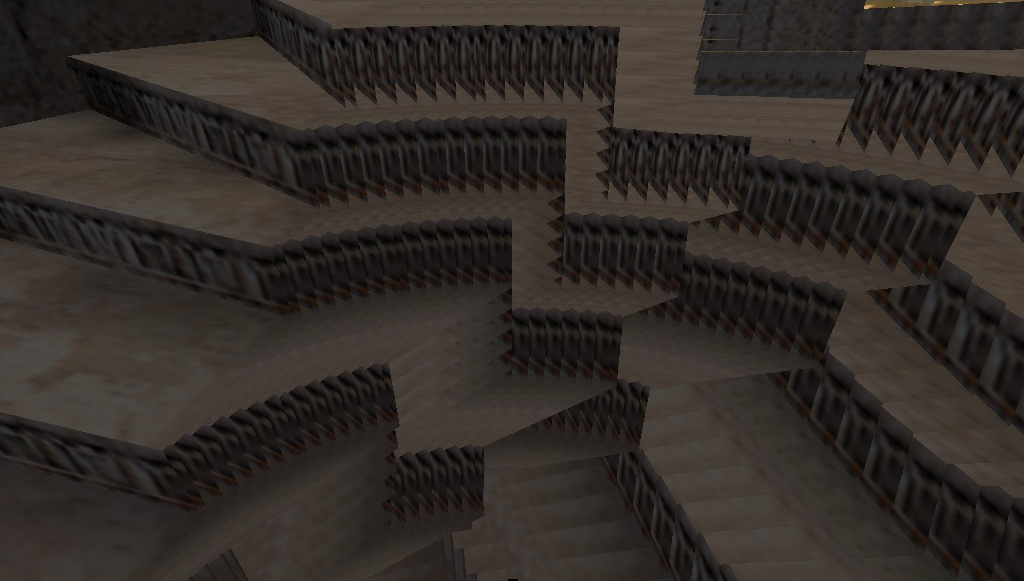

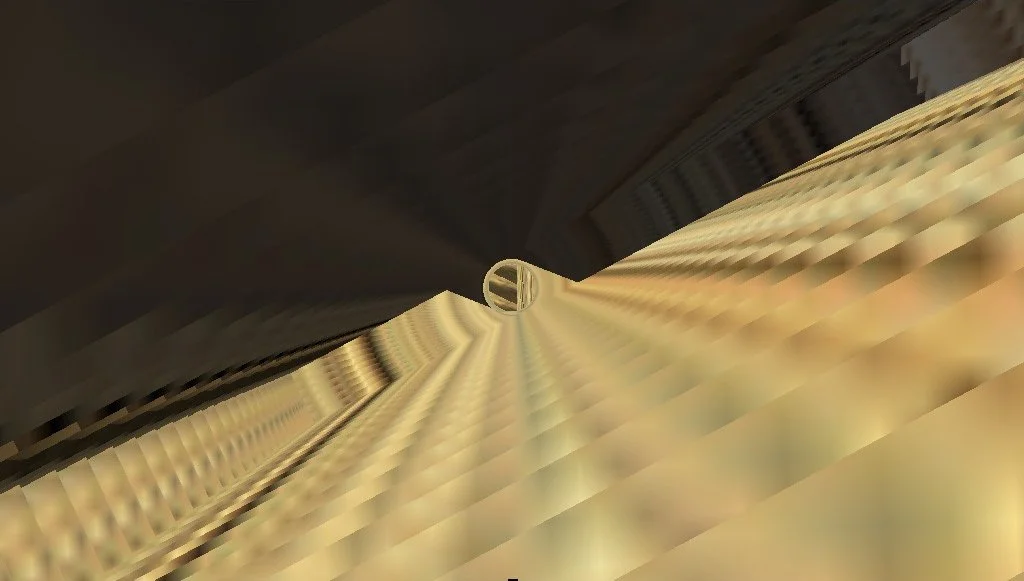

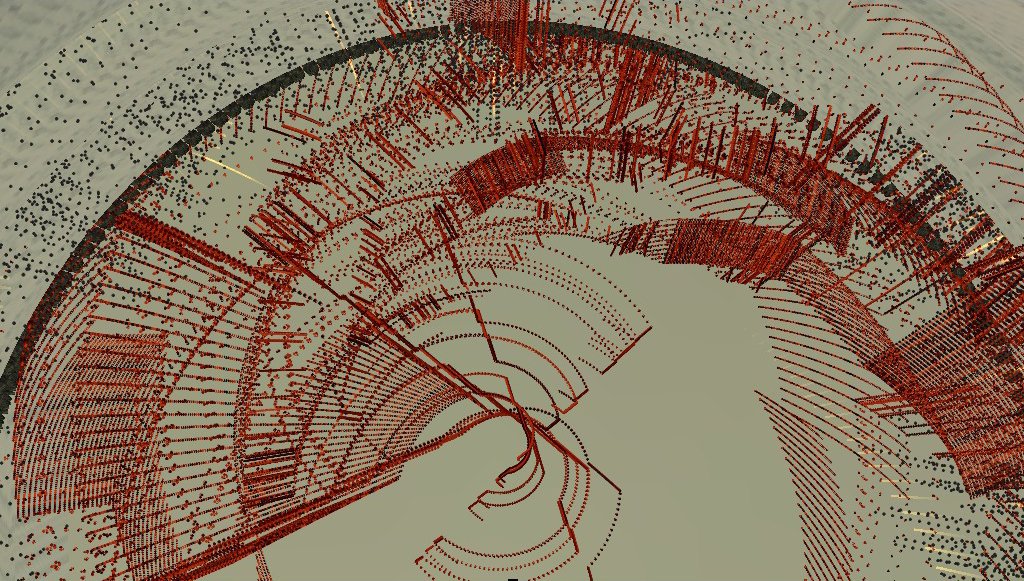

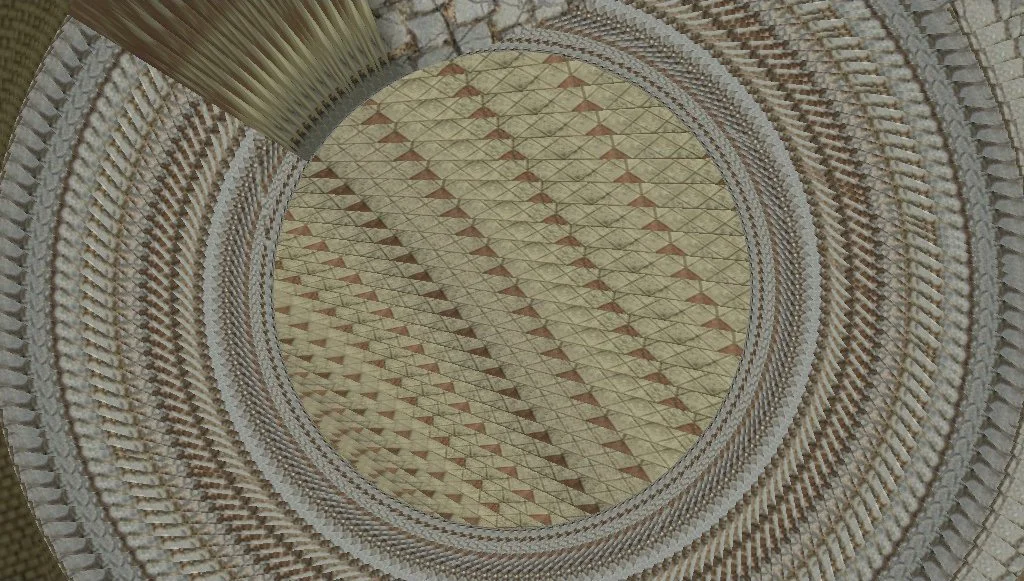

By sticking my virtual spectator camera partway through certain walls in a CS map at just the right angles, I exploit a visual bug in the game engine's renderer, freezing regions of pixels which would normally be redrawn at 100 frames per second, capturing the curving paths of players' bodies and blood in motion, along with sparking bullet impacts, grenades' explosions and smoke, and shell casings, or, by moving my mouse, painting patterns on the screen with the interior contours and textures of the levels themselves.

Screenshots and videos were captured both on live public multiplayer servers with real humans and in local servers I hosted alone or with player bots.

Finding the good spots to capture the action is a process of trial and error, intuition, map knowlege, and luck, sorta like being a sports photographer. If I move the camera too far or fast, the bug either ends or I overdraw and lose my captured traces. It requires skill and intimate familiarity with the game.

Then a bunch of fancy math happened using circuits and electrons to 'make an "AI" model' which rearranged more electrons to be rendered into images, which then served as input to another 'video model' to render a video which is now able to be displayed (also rendering) on your screens.

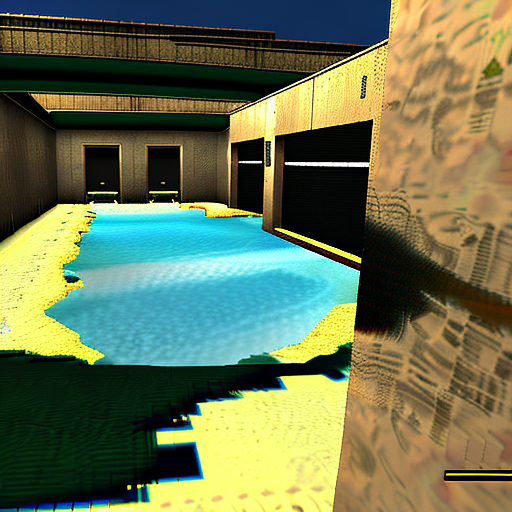

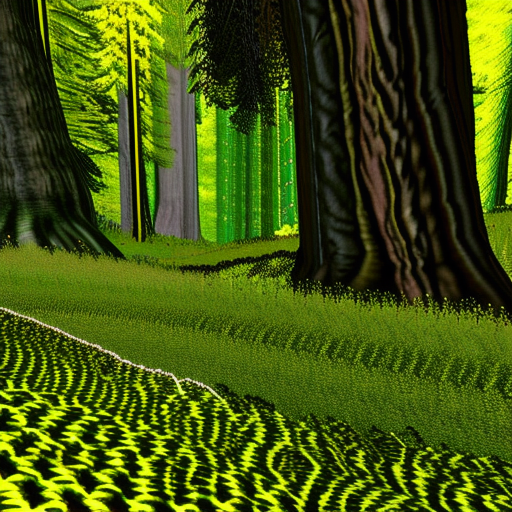

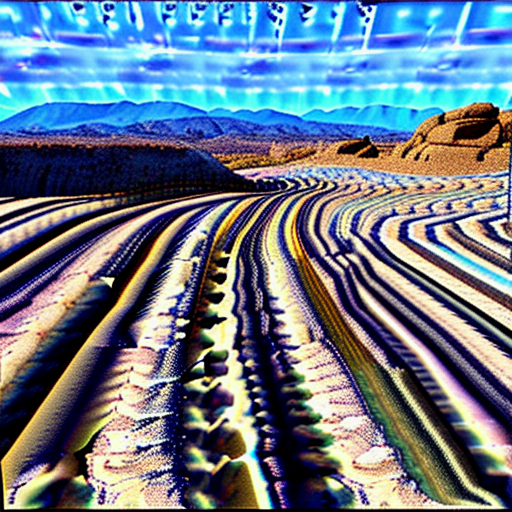

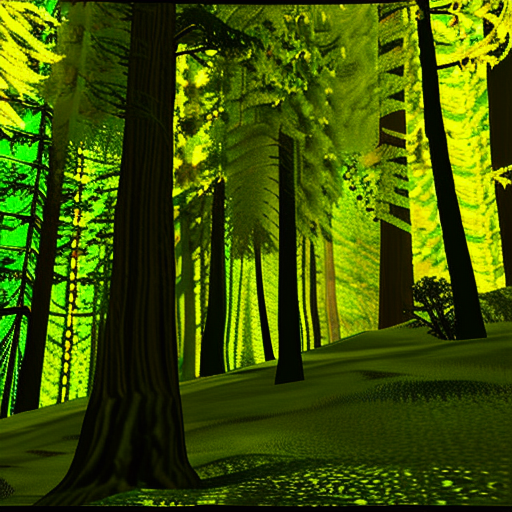

Images were generated from text prompts by picking randomly from a written list of my favorite California places, with a random weighting affecting the ratio between my LORA model and the standard image generation model, then fed into the image2video model as input, again with random weighting between my video LORA and the standard video model, this time with randomly generated text prompts picking from my written lists of possible combatants, reflecting the asymmetric warfare of Counter-Strike by including fascist militias, police, and military forces on one side and rebels, revolutionaries, and freedom fighters on the other.

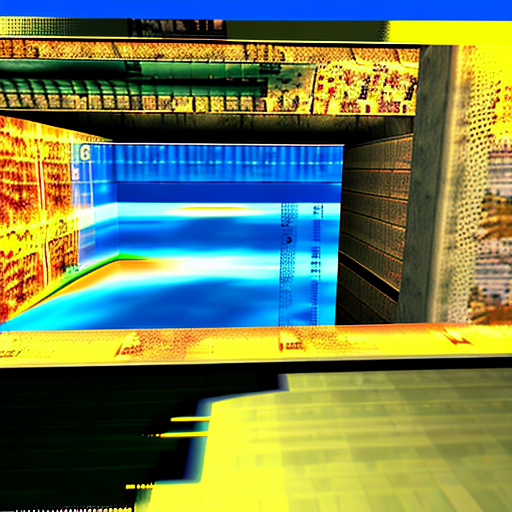

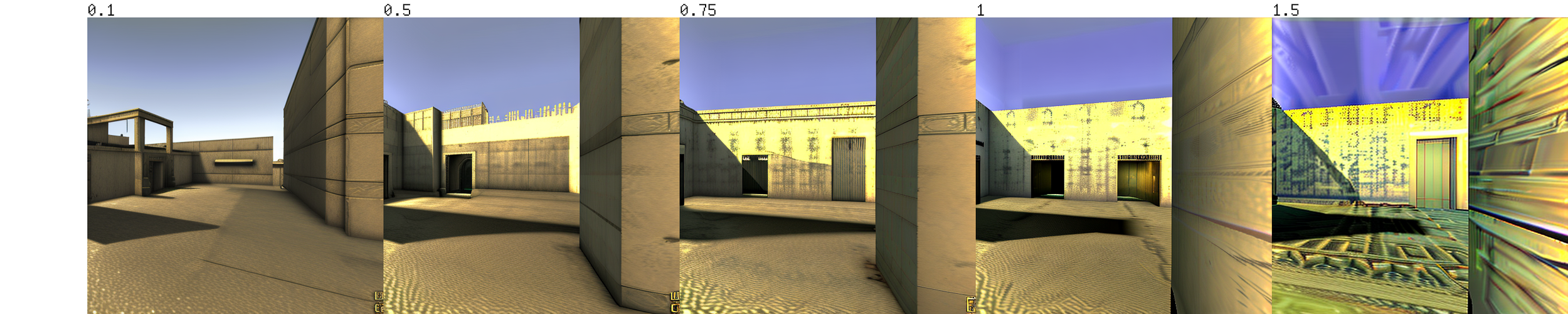

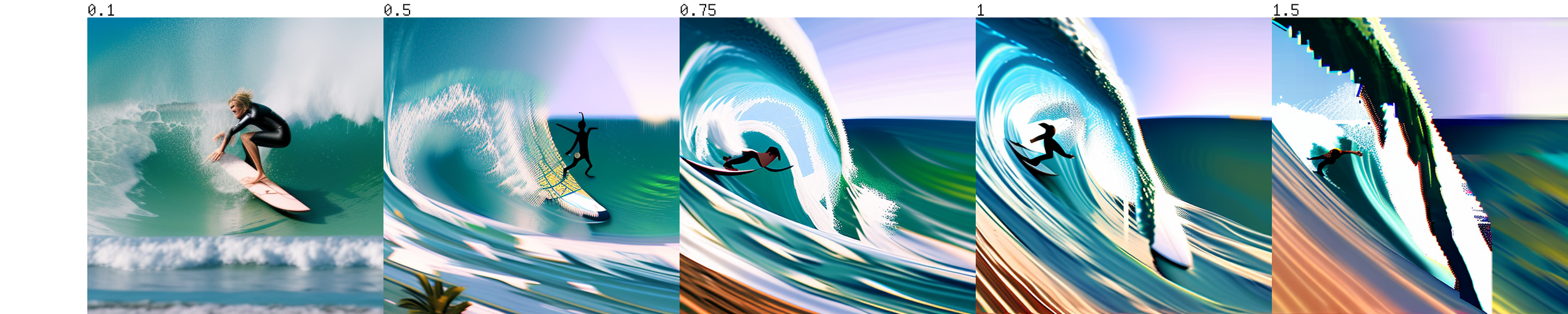

Grids generated showing the image output with different weightings between the standard image model and my LORA, higher numbers use more of my LORA and less of the standard model

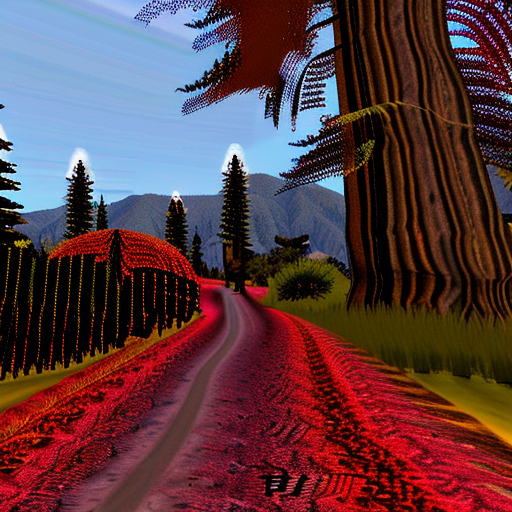

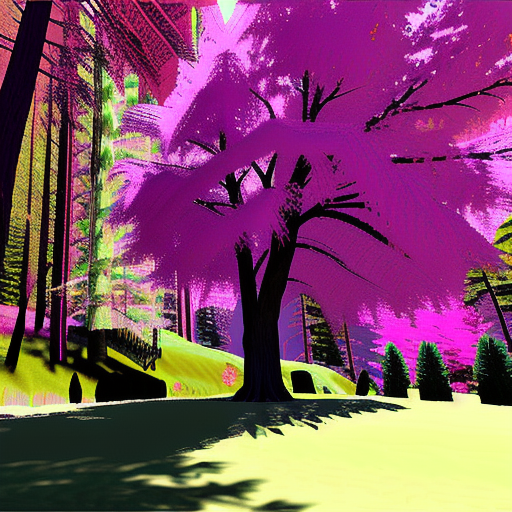

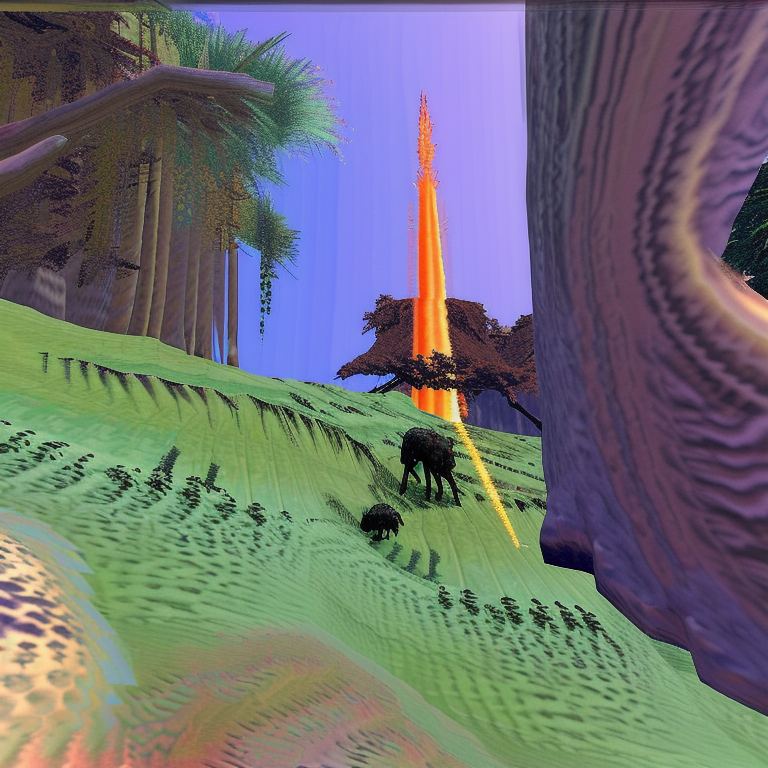

Images captured directly from the game that formed part of the training set for my LORA image model

Video illustrating how traces of player movement build up over time, collaged from a group of training set images captured during a single map (cs_scoutzknivez) of Counter-Strike play